Croft is a free, no-code web application for data mining that lets you pull critical, open-source information from the Internet in just a few clicks. You can also use Croft's task scheduling features to scrape the data on a regular basis and stay up to date.

All you need to do is build an Impression, and your data is ready to be scraped. Impressions are the configuration elements that identify the details you wish to extract from a website. Every Impression is described by its name and the URL of the site from which the data is to be scraped. Once the scrape is done, you can export your data in JSON format.
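To make the idea of an Impression concrete, here is a minimal Python sketch of a record holding the two fields mentioned above, a name and a site URL. The `Impression` class and its validation rules are assumptions made for illustration only; they do not reflect how Croft stores Impressions internally.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Impression:
    """Hypothetical stand-in for a Croft Impression: a name plus a site URL."""

    name: str
    url: str

    def __post_init__(self) -> None:
        # Assumed rule: the name must not be blank.
        if not self.name.strip():
            raise ValueError("an Impression needs a non-empty name")
        # Assumed rule: only absolute http(s) URLs are accepted.
        parts = urlparse(self.url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            raise ValueError(f"not an absolute http(s) URL: {self.url!r}")


# Illustrative values, not taken from Croft's Catalogue.
example = Impression(name="Example headlines", url="https://example.com/news")
print(example.name, "->", example.url)
```

Checking the URL up front mirrors what you would want before pressing ‘Play’: a scrape can only start from a complete web address.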

Let us now walk briefly through how Croft works.

Working:

1. Click here to navigate to Croft and sign up for a free account using your Google credentials.

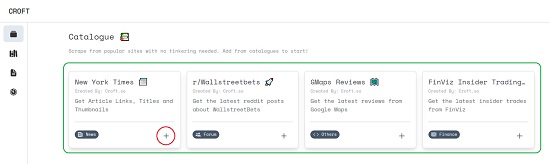

2. You will now be taken to the Croft Dashboard. Click on the top icon in the toolbar on the left to go to the Catalogue page. It lists popular sites that you can add as an Impression to start scraping data in just two clicks.

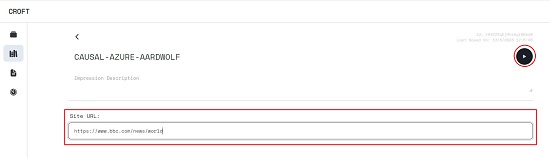

3. Click on the ‘+’ sign at the bottom right of any Catalogue entry and it will automatically be added as an Impression on the corresponding page of the Dashboard. There you can clearly see the Site URL from which the data will be scraped.

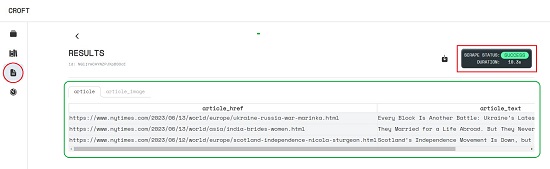

4. To start scraping, click on the ‘Play’ button at the top right of the screen and wait for the process to complete. Once the scrape succeeds, the scrape status will be shown as ‘Success’ and you will automatically be taken to the ‘Results’ page of the Dashboard, where you can view and analyze the outcome.

5. To download the data in JSON format, simply click on the ‘Download’ icon at the top right of the ‘Results’ page.

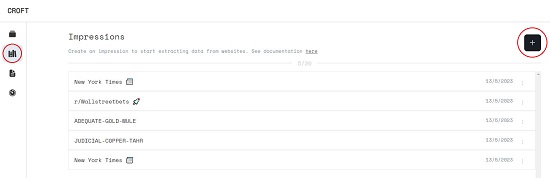

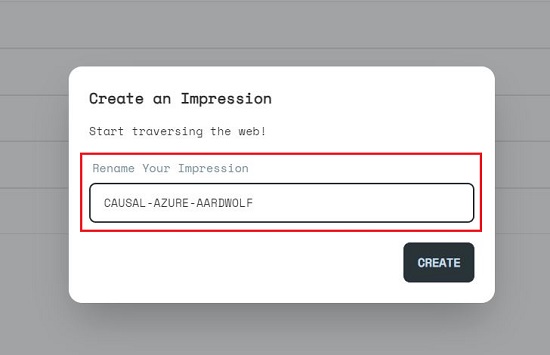

6. To create a new Impression from scratch, click on the ‘Impressions’ icon in the toolbar, and then click on the large ‘+’ sign at the top right of the page. A name will automatically be assigned to the new Impression. Rename it if required and then click on the ‘Create’ button.

7. Type or paste the URL of the site you want to scrape and then, as before, click on the ‘Play’ button. Once the scraping is done, you will be able to view the output on the ‘Results’ page.

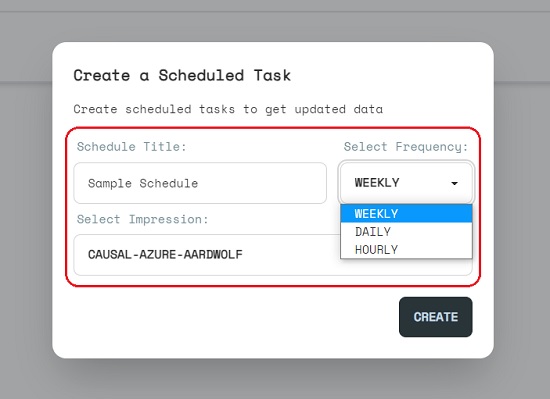

8. Croft also offers a Scheduling feature for scraping data from websites at regular intervals. Click on the ‘Schedules’ icon in the toolbar and then click on the ‘+’ sign at the top right. Assign a Scheduling Title, select the Impression from the drop-down list and specify the frequency of data scraping: Hourly, Daily or Weekly. Click on ‘Create’ and the scraping will be performed according to the schedule you have specified. The results will be visible on the ‘Results’ page as described earlier.
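Once you have downloaded a result as JSON (step 5), you can inspect it outside Croft. The structure of Croft's export is not described here, so the Python sketch below assumes nothing about it and only reports the top-level shape of whatever JSON it is given. The `summarize` and `load_export` helpers and the sample data are hypothetical.

```python
import json
from typing import Any


def summarize(data: Any) -> str:
    """Describe the top-level shape of parsed JSON without assuming a schema."""
    if isinstance(data, list):
        # Collect every key that appears in any object of the list.
        keys = sorted({k for item in data if isinstance(item, dict) for k in item})
        return f"list of {len(data)} items; keys seen: {keys}"
    if isinstance(data, dict):
        return f"object with keys: {sorted(data)}"
    return f"single value of type {type(data).__name__}"


def load_export(path: str) -> Any:
    """Read a downloaded JSON file; the path is whatever you saved it as."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


# Made-up sample standing in for a downloaded file.
sample = json.loads(
    '[{"title": "First", "link": "https://example.com/1"}, {"title": "Second"}]'
)
print(summarize(sample))
```

To try it on a real download, call `summarize(load_export("your-file.json"))` with the name of the file you saved.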

Closing Comments:

Croft is a lightweight, no-code, Notion-based tool that you can use to pull data and other open-source information from the Internet quickly and easily. It also lets you schedule your scraping on an hourly, daily or weekly basis so that you automatically stay up to date.
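If it helps to picture the three schedule frequencies in code, each one amounts to a fixed interval between runs. The Python sketch below is purely illustrative: the `INTERVALS` table and the `next_run` function are assumptions, not Croft's actual scheduler.

```python
from datetime import datetime, timedelta

# Assumed mapping from the frequency labels to fixed intervals.
INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def next_run(last_run: datetime, frequency: str) -> datetime:
    """Return when the next scrape would be due after last_run."""
    try:
        return last_run + INTERVALS[frequency.lower()]
    except KeyError:
        raise ValueError(f"unknown frequency: {frequency!r}") from None


# Illustrative starting point for the last completed scrape.
last = datetime(2024, 1, 1, 9, 0)
for freq in INTERVALS:
    print(freq, next_run(last, freq).isoformat())
```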

Go ahead and try out Croft and let us know what you think. Click here to navigate to Croft. To go through the Croft Documentation, click here.