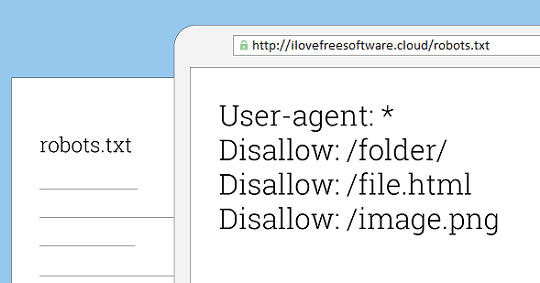

Here are 5 free robots.txt checker websites. These websites take the homepage URL of your website and analyze its robots.txt file. After scanning it, they show the contents of the file along with any errors and warnings. If the file has valid syntax, they simply tell you that it is valid; otherwise, they suggest how to fix it. Some of these websites grab the robots.txt file automatically from the homepage URL, while others need the full path to the file. Once the file has been checked, you can go through the suggestions and fix the problems.

Robots.txt plays an important role in how search engines crawl your website. In this file, you specify which URLs of your website search engines may crawl and which they may not. Mistakes in this file may lead to a gradual fall in the SERP ranking of your site. With the help of the websites in this list, you can analyze the “robots.txt” file of your own websites and of your competitors. Some of these websites are very powerful and ideal for testing a robots.txt file.
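To make the idea of "which URLs may be crawled" concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The sample rules, the `example.com` URLs, and the `is_allowed` helper are made up for illustration. Real crawlers may interpret some rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block /private/ for every crawler.
SAMPLE = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if user_agent may fetch url under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE, "Googlebot", "https://example.com/private/a.html"))  # False
print(is_allowed(SAMPLE, "Googlebot", "https://example.com/blog/"))           # True
```

A single mistyped `Disallow` path in a file like this is enough to hide a whole section of a site from crawlers, which is why it is worth running the file through a checker.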

5 Free Robots.txt Checker Websites:

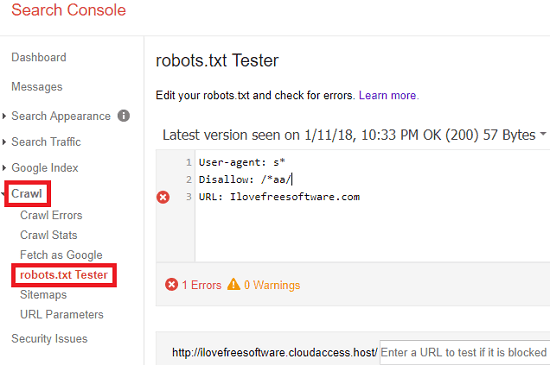

Google Search Console

Google Search Console is probably the most used robots.txt checker tool. However, you can only use it for a website that you own or can verify, for example through access to the cPanel of the target website. After you add your website, Google fetches a lot of details from it, including the robots.txt file. It scans the file and reports any errors, as well as minor warnings about its contents. Besides the robots.txt stats, Google Search Console also shows you other detailed stats about your site.

To use Google Search Console, you need a working Google account. After logging in to your Google account, you can go to the Search Console using the link above. If you have already added a site to it, you are good to go. Go to the Crawl section in Search Console and select “robots.txt Tester”. It will then fetch the robots file of your website and show its contents in the editor, along with any warnings and errors.

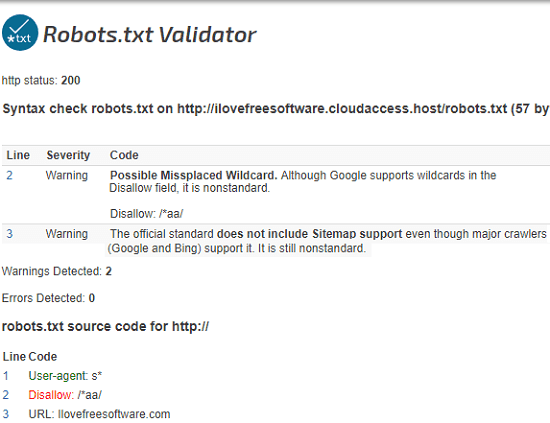

Robots.txt Validator by SEO Chat

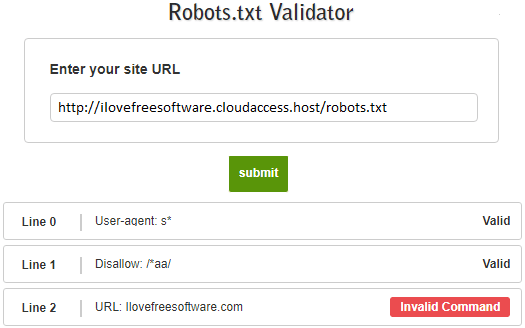

Robots.txt Validator by SEO Chat is one of the best free websites to check the robots file of any website. It takes the URL path to the robots file and grabs its content. It then checks every line of the robots.txt file and shows the result to you. If anything is wrong, it points that out and gives you suggestions to fix it. If everything is fine, it just places a “valid” label in front of each line. Apart from this tool, the website has a nice collection of other SEO tools that you can use, such as a keyword suggestion tool, a meta tag analyzer, and many others.

This is a simple website to validate robots.txt. You don’t even have to create an account to get started. After reaching the website, simply enter the URL of the target website and it will grab the robots.txt file and show its contents. On the interface, the file is displayed line by line, and the validity status appears at the end of each line. If there are any errors or warnings in the file, you can fix them by following the suggestions it gives.
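The line-by-line idea can be sketched in a few lines of Python. This is only an illustration of the approach, not SEO Chat's actual logic. The `check_lines` function, the list of known directives, and the status messages are my own assumptions, and a real validator applies many more rules.

```python
# Directives this sketch recognizes (an assumption; validators differ).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def check_lines(robots_txt):
    """Return one (line_number, text, status) tuple per line of robots_txt."""
    results = []
    seen_user_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and padding
        if not line:
            status = "valid"  # blank lines and pure comments are fine
        elif ":" not in line:
            status = "error: missing ':' between directive and value"
        else:
            field = line.partition(":")[0].strip().lower()
            if field not in KNOWN_DIRECTIVES:
                status = f"warning: unknown directive '{field}'"
            elif field in ("allow", "disallow") and not seen_user_agent:
                status = "error: rule appears before any User-agent line"
            else:
                status = "valid"
            if field == "user-agent":
                seen_user_agent = True
        results.append((number, raw, status))
    return results


# Hypothetical file with a typo (line 2) and a missing colon (line 3).
SAMPLE = """\
User-agent: *
Disalow: /tmp/
Disallow /private/
Disallow: /cgi-bin/
"""

for number, text, status in check_lines(SAMPLE):
    print(f"{number}: {text:<22} {status}")
```

With the sample above, lines 1 and 4 come back as valid, line 2 gets a warning for the misspelled directive, and line 3 gets an error for the missing colon.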

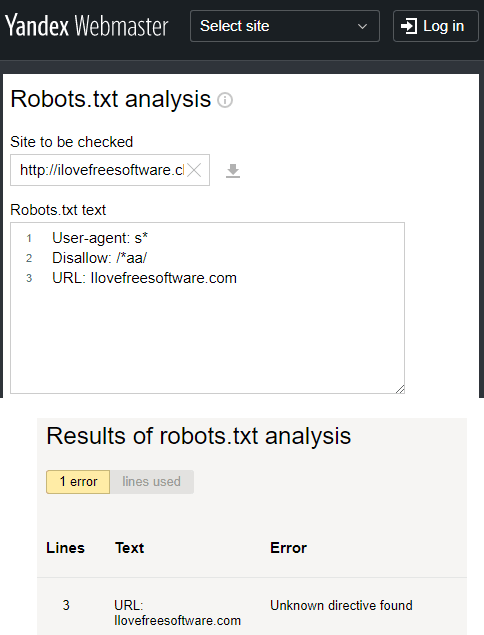

Yandex’s Webmaster Tool

Yandex’s Webmaster Tool is another very good way to validate and fix the robots.txt file of almost any website. It takes the homepage URL of a target website and automatically fetches the robots file. It shows the contents of the file so that you can analyze them and see whether everything is alright. If there are errors in the file, it points them out and gives you suggestions to fix them.

Unlike Google, Yandex’s robots.txt checker doesn’t ask you to log in or sign up. Just go to its homepage using the above link and enter the homepage URL of your website there. It will automatically grab the robots.txt file and show it to you. Warnings and errors in the file are marked, together with suggestions to fix them. This way you can have a well-optimized robots.txt file on your website.
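Grabbing the file from a homepage URL works because robots.txt always sits at the root of the host. Below is a rough sketch of that step with Python's standard library. The `robots_url` and `fetch_robots` helpers and the `example.com` address are hypothetical, and this is not how Yandex's tool is implemented.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url):
    """Build the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError("expected a full URL such as https://example.com/")
    # Keep scheme and host; replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url, timeout=10):
    """Download robots.txt for page_url and return it as text."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://example.com/blog/post.html?ref=home"))
# https://example.com/robots.txt
```

Calling `fetch_robots("https://example.com/")` would then return the file contents, ready to be checked line by line.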

Robots.txt Validator By Dupli Checker

Robots.txt Validator By Dupli Checker is another free robots.txt validator website that you can try. Just like the others, it takes the URL path to the robots file on any website and shows the results. It grabs the contents of the robots.txt file and lists them line by line. If there are errors on a specific line, it shows them to you so that you can fix them yourself. The tool is very simple and fetches the file quickly for analysis.

This robots.txt checker website doesn’t ask you to register an account first. Just access it from the URL above and, on the homepage of the tool, specify the URL of the robots file of the target website. It will fetch the file and show its content. If there are errors on any line, it shows them along with the reason. After that, you can fix the issues and upload the corrected file to your website.

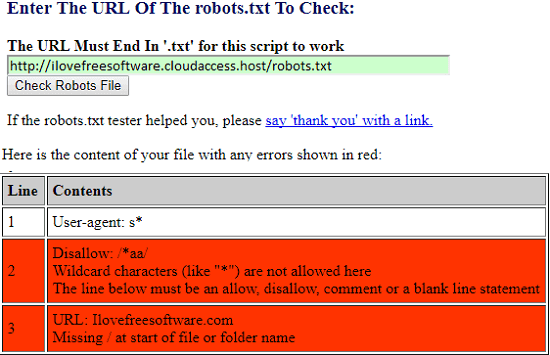

Robots.txt Checker by Search Engine Promotion Help

Robots.txt Checker by Search Engine Promotion Help is the last tool in my list for checking and analyzing the robots.txt file of any website. You can paste the content of the robots.txt file into it, or give it the URL path so that it fetches the file automatically. Once it has the contents of the file, it puts them on your screen and highlights the errors it detects. It also shows the details of each error so that you can fix them.

You can use this website in the same way that I have explained for the other websites. Just go to the homepage of this robots.txt checker and enter the URL of the robots file of a specific website. Alternatively, you can copy and paste the contents of the robots file manually into the interface. It will scan the file and show you any errors it finds, which you can then correct in your own file.

Closing Thoughts

These are some of the best free robots.txt checkers you can use. With these websites, you can easily check the robots file of almost any website in just a few seconds. All you have to do is specify the URL of the file and they will automatically fetch its data. These websites also help you resolve any errors and warnings. Out of all these, I think that Google Search Console, Yandex’s Webmaster Tool, and Robots.txt Validator by SEO Chat are the best tools in my list. You can try them and tell me what you think about them.