LlamaGPT is a free, self-hosted AI chatbot based on Llama 2 that you can run on your PC or deploy on a server. It comes as a Docker container that you can quickly deploy on your VPS and chat with in the same way as ChatGPT. It offers a simple UI that organizes your chats and prompts. You can save your favorite prompts and reuse them in any chat.

There are various websites where you can try Llama 2 for free, but if you want something that runs locally on your own PC or server, LlamaGPT is a good option. This open-source project can be deployed on any capable hardware and can even run the largest Llama 2 70B model. Installation and setup are simple, and I explain both later in this post.

What is Llama 2?

Llama 2 is the second generation of Llama, the open-source large language model (LLM) developed by Meta AI. It is the successor to Llama 1, which was released earlier in 2023. Llama 2 has been trained on a very large dataset of text and code (about 2 trillion tokens, according to Meta). It can generate text, translate languages, and write various kinds of creative content.

You can use it as an AI chatbot, and it will answer your questions in an informative way. Llama 2 is available for anyone to use, research, and build useful tools with. It comes in three parameter sizes: 7 billion, 13 billion, and 70 billion.

You can download these models and use them directly, but the process can be tricky because the models don’t come with a UI. LlamaGPT gives you a ChatGPT-like chat interface for the Llama 2 models mentioned above. Once it is set up, you can ask unlimited questions for as long as you like, since it runs on your own hardware.

Hardware Requirements for running Llama 2 Models via LlamaGPT:

The RAM you need for running LlamaGPT on a server or personal computer depends on the model you want to run. I recommend a Linux system with 8 GB or more of swap space.

- 7B Model requires 8GB RAM.

- 13B Model requires 16GB RAM.

- 70B Model requires 40GB RAM.

In addition, you should have a good CPU with at least 8 cores so that deploying the LlamaGPT Docker container doesn’t make your system unresponsive.
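
The requirements above can be checked before you start. Here is a minimal sketch, assuming a Linux system where /proc/meminfo is readable. The helper names `suggest_model` and `has_enough_cores` are made up for this example and are not part of LlamaGPT; the thresholds are simply the RAM and core figures listed in this post.

```shell
#!/bin/sh
# Sketch: suggest the largest Llama 2 model for a given amount of RAM,
# using the thresholds from this article (8 GB, 16 GB, 40 GB).

# suggest_model RAM_GB -> prints 70b, 13b, 7b, or none (hypothetical helper)
suggest_model() {
    if [ "$1" -ge 40 ]; then
        echo "70b"
    elif [ "$1" -ge 16 ]; then
        echo "13b"
    elif [ "$1" -ge 8 ]; then
        echo "7b"
    else
        echo "none"
    fi
}

# has_enough_cores CORES -> succeeds when CORES is at least 8 (hypothetical helper)
has_enough_cores() {
    [ "$1" -ge 8 ]
}

# Report the values for the current machine (Linux only).
if [ -r /proc/meminfo ]; then
    # MemTotal is reported in kB and is slightly below the installed RAM,
    # so round up to the next whole GB.
    total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    ram_gb=$(( (${total_kb:-0} + 1048575) / 1048576 ))
    # Fall back to 0 if nproc is not available.
    cores=$(nproc 2>/dev/null || echo 0)
    echo "RAM: ${ram_gb} GB, CPU cores: ${cores}"
    echo "Largest suggested model: $(suggest_model "$ram_gb")"
    if ! has_enough_cores "$cores"; then
        echo "Fewer than 8 cores: the system may become sluggish."
    fi
fi
```

Treat the result as a rough guide only, since other running programs also use RAM.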

Installing/Deploying LlamaGPT:

To install LlamaGPT, you just need to make sure that you have Docker and Git installed. If you have a Linux system, Git is most probably already there, and you can install Docker from Snapcraft or through your distribution’s package manager (for example, pacman on Arch Linux).
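
If you are not sure whether both tools are present, a quick check like the following can help. This is only a sketch: `check_tools` is a helper name invented for this example, and it only looks for the commands on your PATH, so it does not verify that the Docker daemon is actually running.

```shell
#!/bin/sh
# check_tools NAME... -> prints the status of each tool,
# returns 1 if any of them is missing (hypothetical helper)
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found: $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

check_tools git docker || echo "Install the missing tools before continuing."

# The commands in this post use the Compose plugin ("docker compose"),
# so check for it as well when Docker itself is present.
if command -v docker >/dev/null 2>&1; then
    docker compose version >/dev/null 2>&1 || echo "Docker Compose plugin not found."
fi
```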

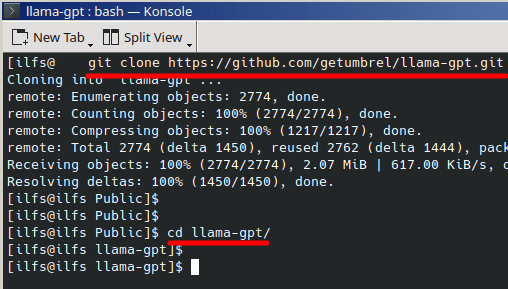

The very first thing you have to do is clone the GitHub repository of LlamaGPT and change into the repository root. Run these commands one after another.

git clone https://github.com/getumbrel/llama-gpt

cd llama-gpt

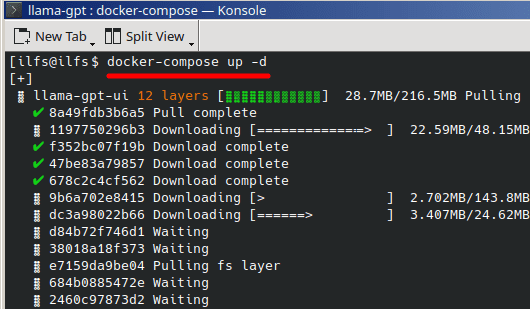

Next, choose the Docker Compose command that matches the amount of RAM installed. To run the most basic version, which will work on most systems, issue the following command.

docker compose up -d

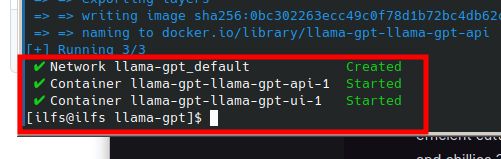

The above command will take some time to set up all the dependencies and download the Llama models. On a slow internet connection, this can take up to an hour. Once you see the green “started” status, you are ready to use it.

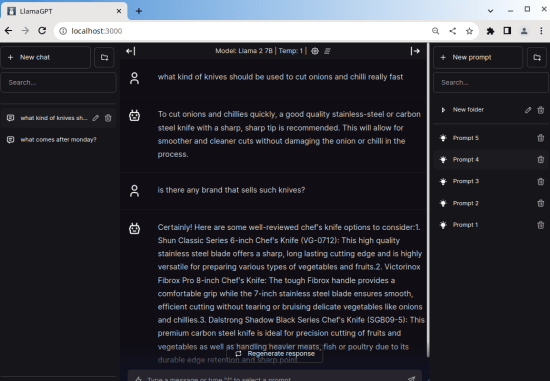

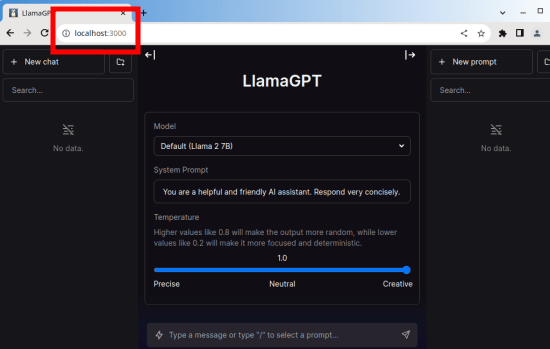

Type localhost:3000 in the address bar of your browser and hit Enter. The homepage of the Llama chatbot will show up. You can see this in the screenshot below.
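
Since the first start can take a while, you may prefer to let a small script tell you when the UI is up instead of refreshing the browser. Here is a minimal sketch, assuming curl is installed. `wait_for_ui` is a helper name invented for this example, and the attempt count and delay are arbitrary values you can change.

```shell
#!/bin/sh
# wait_for_ui URL ATTEMPTS DELAY_SECONDS (hypothetical helper)
# Polls URL until it answers successfully or ATTEMPTS requests have failed.
wait_for_ui() {
    url=$1
    attempts=$2
    delay=$3
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -s hides progress output, -f treats HTTP errors as failures,
        # -o /dev/null discards the page body, --max-time caps each request.
        if curl -s -f -o /dev/null --max-time 5 "$url"; then
            echo "UI is reachable at $url"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "UI did not respond after $attempts attempts" >&2
    return 1
}

# Example call: try once every 10 seconds, for up to 10 minutes.
# wait_for_ui http://localhost:3000 60 10
```

The example call is commented out so that loading the script does not block; uncomment it or run it by hand after starting the containers.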

At this point, you have successfully installed and set up a Llama 2 chatbot with a nice UI. In the next section, we will see how to use it to ask questions and get AI-generated answers.

Using LlamaGPT:

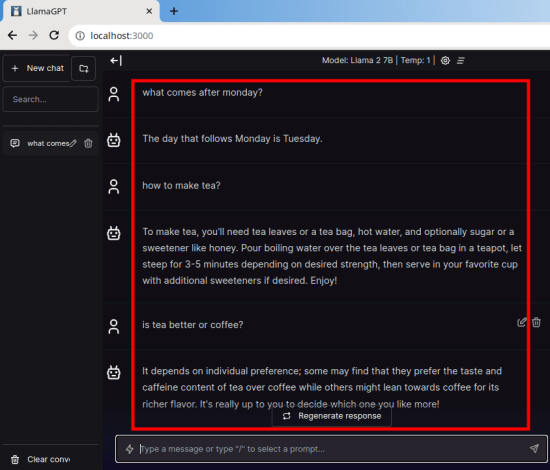

There is no login or sign-up. The main interface is right in front of you. You can start a chat right away and it will produce answers. Responses will not be very fast, but it works.

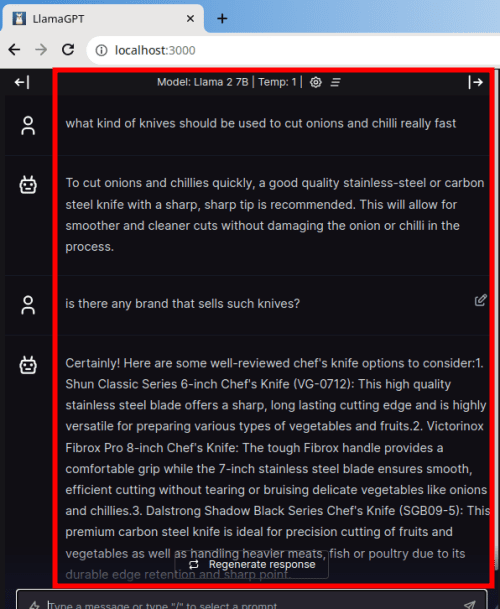

Now create another chat and start asking questions on different subjects. It will understand the intent and generate answers accordingly. In the screenshot below, I asked it about kitchen knives and it gave accurate information. This is something that was missing from the earlier version of Llama.

In the left sidebar, you will see all the chats that you have started. You can start a new chat anytime and a new entry will be created for it here. You can also delete a chat when you are done with it.

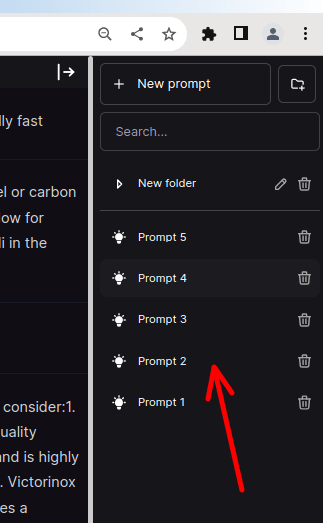

In the right sidebar, you can create different kinds of prompts and then use them during a Q&A session. It is as simple as that. Just like chats, you can delete older saved prompts.

In this way, you can use this simple and powerful tool to deploy a ChatGPT-like UI on any PC or server and chat with the AI model on any topic. The Llama 2 7B model is good for generating short paragraphs, but for more sophisticated results, try the bigger models. To run those, you will need more RAM. For now, LlamaGPT doesn’t seem to support GPUs, but I hope support is added in coming updates.

Closing thoughts:

If you are looking for a way to run any of the Llama 2 models on your PC completely offline, you are in the right place. Just follow the installation guide of the LlamaGPT project and have your own personal AI chatbot. With this, you own your data, and you can also deploy it on a VPS and use it from anywhere. I liked the UI, where you can specify a system role and manage the chat history just like in ChatGPT.